Hi {{first_name | there}},

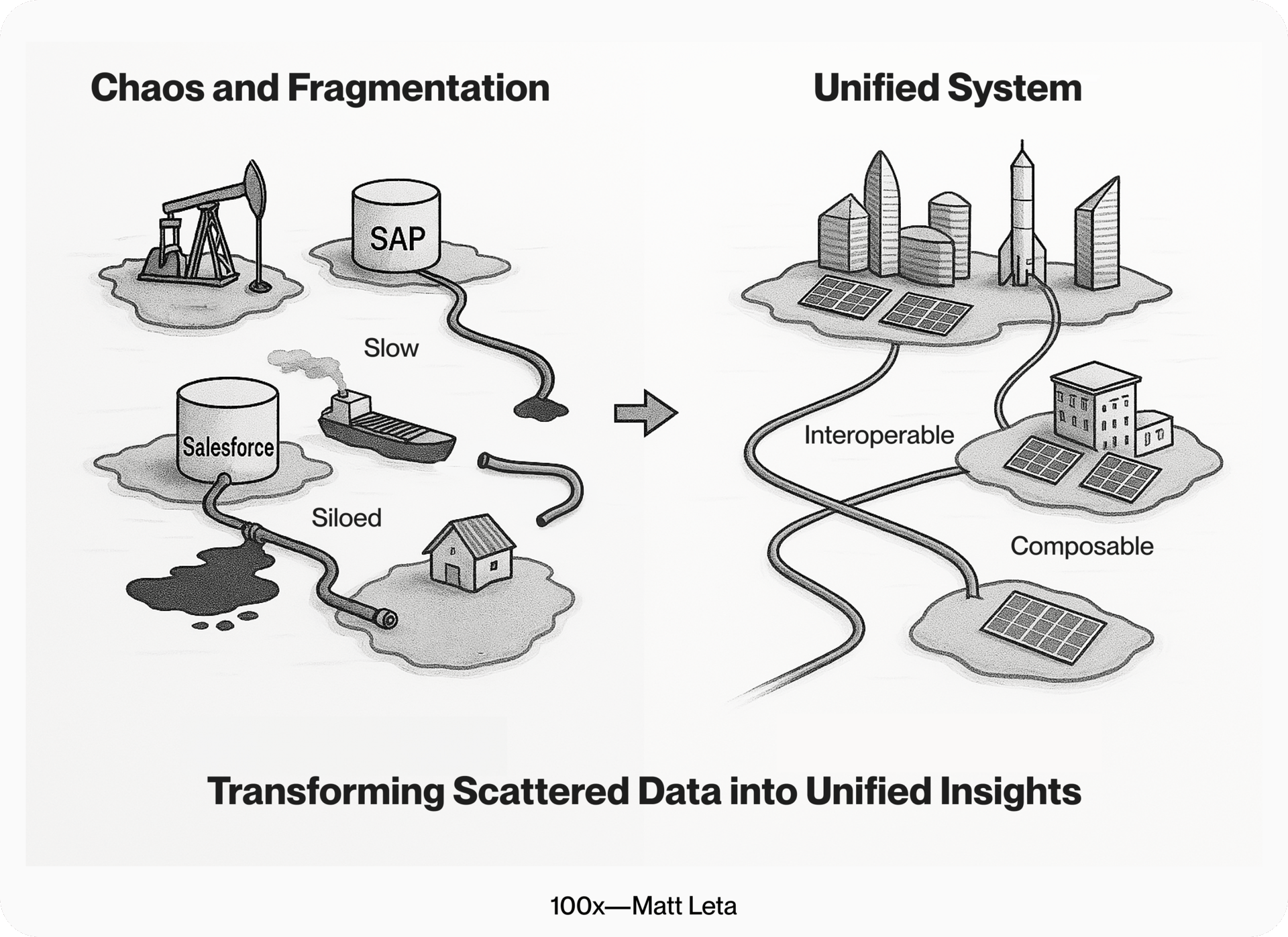

Most executives have heard the phrase "data is the new oil" countless times in boardrooms and at conferences. But having data isn't enough. Many enterprises are drowning in data while starving for insights.

I recently worked with an American automotive manufacturer that illustrates this challenge perfectly. They had invested millions in digital tools, but their AI initiatives weren't delivering results because their data was trapped in dozens of disconnected systems:

Marketing data lived in HubSpot

Sales data in Salesforce

Operations data in SAP

Customer service data in five different tools

Each department had its own "source of truth," and none of them agreed with each other. This fragmentation kills transformation efforts. When we finally unified their data fabric, their AI models suddenly became dramatically more effective.

The harsh reality of data fragmentation

Most organizations have no idea what data they actually have. Data gets scattered across:

Well over a hundred SaaS tools (the average company uses 150-200)

Legacy systems

Spreadsheets

Paper records

A Fortune 500 firm couldn't even say how many customers it had. Different systems gave different counts, and nobody knew which one was right.
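To see why the counts disagree, here is a minimal Python sketch with made-up records from three hypothetical systems. It assumes every customer record carries an email address and that a trimmed, lowercased email is a good-enough matching key; real entity resolution usually needs fuzzier matching. Summing per-system counts double-counts shared customers, while matching on one normalized key yields a single answer.

```python
# Hypothetical records from three systems; names and fields are illustrative only.
crm = [
    {"name": "Ana Ruiz", "email": "Ana.Ruiz@Example.com"},
    {"name": "Ben Cho", "email": "ben.cho@example.com"},
]
billing = [
    {"customer": "A. Ruiz", "email": "ana.ruiz@example.com "},
    {"customer": "Cara Diaz", "email": "cara@example.com"},
]
support = [
    {"contact": "Ben Cho", "email": "BEN.CHO@example.com"},
]


def normalize_email(raw: str) -> str:
    """Trim whitespace and lowercase so trivially different spellings match."""
    return raw.strip().lower()


def unified_customer_keys(*systems: list[dict]) -> set[str]:
    """Collect one normalized key per customer across all systems."""
    keys = set()
    for records in systems:
        for record in records:
            keys.add(normalize_email(record["email"]))
    return keys


per_system = {"crm": len(crm), "billing": len(billing), "support": len(support)}
naive_total = sum(per_system.values())

print(per_system)   # {'crm': 2, 'billing': 2, 'support': 1}
print(naive_total)  # 5
print(len(unified_customer_keys(crm, billing, support)))  # 3
```

Here the naive total is 5 while the unified count is 3: the same two customers simply appear in more than one system under slightly different spellings.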

Disconnected systems create serious problems:

Scheduling tools don't sync with payroll systems

Customer service can't access real-time production data

Problems escalate unnecessarily

That makes integration a requirement for sustainable transformation, not a nice-to-have. Great results are impossible without connected software and data.

Rethinking data quality versus relevance

You can have the cleanest, most pristine data in the world and it might still be completely useless. We've been obsessing over data quality while missing something more important: relevance.

Smart organizations are making two massive shifts:

1. Generating relevant data

No more settling for whatever numbers are easy to collect

Asking tough questions about what data actually matters

For example, pharma companies now pour resources into human-specific studies

2. Auditing historical data

Not just checking if numbers add up

Asking whether preserved datasets matter anymore

Some companies maintain decades of pristine records only to discover those datasets are no longer relevant

You can polish a lump of coal all day long and it's never going to become a diamond. But start with a rough diamond (relevant but messy data), and AI can help cut it into something spectacular.

Stop asking "Is this data clean?" Start asking "Does this data actually tell us something useful?"

Understanding your data architecture options

Every business leader should understand these options well enough to tell whether tech teams are recommending the right one.

Data warehouse

Like a library where every book must be properly cataloged first

Highly structured, organized data in relational tables

Ideal for pre-defined queries and reporting

Data lake

Like a smart storage closet

Toss in everything structured or unstructured

No meticulous labeling upfront required

Catalogs and processing tools organize the data later, when it is used

Stores raw data in its original format

Data lakehouse

Combines raw flexibility of data lakes with warehouse-grade structure

No need to define a rigid structure before storing data (as schema-on-write requires)

Structure it only when you use it (schema-on-read)

Can store both structured and unstructured data
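The difference between schema-on-write and schema-on-read is easier to see in code. This is a toy Python sketch, not any platform's API: `write_to_warehouse`, `write_to_lake`, and `read_amounts` are hypothetical functions, and in-memory lists stand in for real storage. The warehouse path rejects rows that don't match a predefined schema at load time; the lake path stores anything and applies structure only when a query reads it.

```python
import json

# Schema-on-write: a fixed schema is enforced before anything is stored.
WAREHOUSE_SCHEMA = {"order_id": int, "amount": float}


def write_to_warehouse(table: list[dict], row: dict) -> None:
    """Reject rows that don't match the schema at load time (schema-on-write)."""
    if set(row) != set(WAREHOUSE_SCHEMA):
        raise ValueError(f"unexpected columns: {sorted(row)}")
    for column, expected_type in WAREHOUSE_SCHEMA.items():
        if not isinstance(row[column], expected_type):
            raise TypeError(f"{column} must be {expected_type.__name__}")
    table.append(row)


# Schema-on-read: raw records are stored as-is; structure is applied at query time.
def write_to_lake(lake: list[str], raw_record: str) -> None:
    lake.append(raw_record)  # no validation: keep the data in its original form


def read_amounts(lake: list[str]) -> list[float]:
    """Apply a schema only when reading: parse JSON and pull out 'amount' if present."""
    amounts = []
    for raw in lake:
        record = json.loads(raw)
        if "amount" in record:
            amounts.append(float(record["amount"]))
    return amounts


warehouse: list[dict] = []
write_to_warehouse(warehouse, {"order_id": 1, "amount": 19.99})

lake: list[str] = []
write_to_lake(lake, '{"order_id": 1, "amount": "19.99"}')
write_to_lake(lake, '{"ticket": 42, "text": "Brakes squeak"}')  # different shape, still accepted
print(read_amounts(lake))  # [19.99]
```

A lakehouse aims to offer both: raw storage like the lake path, plus the option to enforce a schema where it matters (many lakehouse table formats support schema enforcement on write).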

Current adoption shows the trend:

55% of organizations use lakehouses for analytics

Projected to reach 67% in three years

85% of adopters power their AI initiatives through these platforms

How data lakehouses create real business value

Think of a lakehouse as a sophisticated assembly line for your data, where each stage transforms raw information into business gold.

How raw data becomes real business value: a simple breakdown of the Data Lakehouse flow.

Ingestion layer

Acts like a powerful digital vacuum

Pulls in operational data, financial transactions, customer interactions

Includes IoT sensor readings and social media sentiment

Keeps data untouched in native format

Distillation layer

Refines raw data into structured, usable formats

Aligns fragmented information so that:

Customer records match transaction histories

Support tickets link to usage data

Marketing campaigns connect to actual outcomes

Processing layer

Runs complex queries

Powers AI models

Translates numbers into insights

Reveals behavioral patterns and inefficiencies

Insights and unified operations layers

Deliver actionable intelligence via dashboards

Monitor performance and manage workflows

Prevent your lake from becoming a data swamp
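The four layers can be sketched as plain Python functions chained together. This is an illustration under loud assumptions: the source names, field names (`CustomerID`, `cust`, `amount`, `ticket`), and sample records are hypothetical, and in-memory lists stand in for what a real lakehouse would run on a distributed engine over table storage.

```python
from collections import defaultdict


# Ingestion: raw events kept exactly as received (hypothetical sample data).
def ingest() -> list[dict]:
    return [
        {"source": "salesforce", "CustomerID": "C1", "amount": "1200"},
        {"source": "sap", "cust": "c1", "amount": "300"},
        {"source": "helpdesk", "cust": "C2", "ticket": "Late delivery"},
        {"source": "sap", "cust": "C2", "amount": None},  # incomplete record
    ]


# Distillation: map each source's field names onto one shared customer key and clean types.
def distill(raw: list[dict]) -> list[dict]:
    clean = []
    for event in raw:
        customer = (event.get("CustomerID") or event.get("cust") or "").upper()
        if not customer:
            continue  # drop records that can't be tied to a customer
        row = {"customer": customer, "source": event["source"]}
        if event.get("amount") is not None:
            row["amount"] = float(event["amount"])
        if "ticket" in event:
            row["ticket"] = event["ticket"]
        clean.append(row)
    return clean


# Processing: aggregate the aligned records per customer.
def process(rows: list[dict]) -> dict[str, dict]:
    summary = defaultdict(lambda: {"revenue": 0.0, "tickets": 0})
    for row in rows:
        summary[row["customer"]]["revenue"] += row.get("amount", 0.0)
        summary[row["customer"]]["tickets"] += 1 if "ticket" in row else 0
    return dict(summary)


# Insights: turn the aggregates into lines a dashboard could show.
def insights(summary: dict[str, dict]) -> list[str]:
    return [
        f"{customer}: revenue {stats['revenue']:.0f}, support tickets {stats['tickets']}"
        for customer, stats in sorted(summary.items())
    ]


for line in insights(process(distill(ingest()))):
    print(line)
# C1: revenue 1500, support tickets 0
# C2: revenue 0, support tickets 1
```

The important step is distillation: two systems spell the customer key differently ("C1" vs "c1"), and only after they are aligned does the processing layer see a single customer with revenue of 1500.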

Building the infrastructure without boiling the ocean

Always start small and think big. Begin with your most valuable data sources and gradually expand.

Core principles

Make it accessible - Your data lake should be easy to query by both technical and non-technical users. Data is only valuable if people can actually use it.

Keep it clean - Implement strong data governance from day one. Bad data is worse than no data because it leads to false confidence.

Think real-time - In today's world, week-old data might as well be ancient history. Design your infrastructure for real-time processing from the start.

Plan for scale - Your data needs will grow exponentially. Choose solutions that can grow with you without requiring a complete rebuild.

Security considerations

Centralizing data creates opportunities and risks

Put robust security measures in place from the start

Don't let security become an excuse for data silos

Modern data lakes offer granular access controls, encryption, and audit trails
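Granular access control does not require separate copies of the data. A minimal sketch, assuming a hypothetical role-to-column policy (`POLICY`) and an in-memory audit log: every role queries the same dataset, sees only the columns it is allowed to see, and each access is recorded. Encryption is not shown, and real platforms configure such policies through their own tools rather than code like this.

```python
from datetime import datetime, timezone

# Hypothetical role-to-column policy: which roles may see which columns.
POLICY = {
    "analyst": {"customer", "region", "revenue"},
    "support": {"customer", "region", "email"},
}

AUDIT_LOG: list[dict] = []


def query(role: str, rows: list[dict]) -> list[dict]:
    """Return only the columns this role may see, and record the access."""
    allowed = POLICY.get(role, set())  # unknown roles see nothing
    AUDIT_LOG.append({
        "role": role,
        "columns": sorted(allowed),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return [{k: v for k, v in row.items() if k in allowed} for row in rows]


customers = [{"customer": "C1", "region": "Midwest", "email": "c1@example.com", "revenue": 1500.0}]
print(query("analyst", customers))  # [{'customer': 'C1', 'region': 'Midwest', 'revenue': 1500.0}]
print(query("support", customers))  # [{'customer': 'C1', 'region': 'Midwest', 'email': 'c1@example.com'}]
print(len(AUDIT_LOG))               # 2
```

One shared dataset with per-role views is what keeps security from turning into a reason to build new silos.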

Avoiding the predictable pitfalls

Watch out for these common mistakes:

"Build it and they will come" fallacy - Show clear value and make it easy to access

"Perfect data" trap - Start with what you have and improve as you go •

"Technology first" mistake - Focus on solving business problems, not implementing technology

Data quality issues - Garbage in, garbage out; prevent it with governance from day one

Governance problems - Establish clear ownership and policies with cross-department representation

Siloed data ownership - Data must flow across teams, not stop at departmental borders

Your immediate action plan

Tomorrow, start here:

Confirm an executive sponsor

Map your tech ecosystem and AI readiness with brutal honesty

Use proven frameworks to locate opportunities within six weeks

Month 1: Know where you stand

Map every system in your organization

Identify your champions (rarely the ones with fancy titles)

Look for people building workarounds because existing systems don't cut it

Month 2: Build your strategy

Turn insights into action with specific targets

Scale gradually and communicate how this changes daily work

Document hours spent on tasks that could be automated

Month 3: Lay the foundation

Clean up your most critical software

Focus on tools and datasets that drive highest-value decisions

Target those that are relatively quick to update

One final thought

Start small, move fast, learn constantly. Start now and do not stop. Budgets don't determine which organizations win. The ones who get ahead learn and adapt the fastest.

Tool consolidation reduces chaos. Fewer tools, tighter integration, better results. The best organizations simplify workflows, unify their systems, and operate from the same source of truth.

When you've unified your data fabric and built the right foundation, you're ready for whatever comes next.

Much Love,

Matt

At Lighthouse, we love featuring fresh perspectives from our community of AI, tech, and innovation leaders. Got insights to share? Just reply to this email—I’d love to hear from you!